Nomic AI has released Nomic Embed, an open-source, auditable, and high-performing text embedding model trained with a multi-stage training pipeline. It also has an extended context length, supporting tasks such as retrieval-augmented generation (RAG) and semantic search. Popular existing models, including OpenAI’s text-embedding-ada-002, lack openness and auditability. Nomic Embed addresses the challenge of developing an open text embedding model that outperforms current closed-source models.

Existing state-of-the-art models dominate long-context text embedding tasks. However, their closed-source nature and the unavailability of their training data for auditing are limitations. The proposed solution, Nomic Embed, provides an open-source, auditable, and high-performing text embedding model. Nomic Embed’s key features include an 8192-token context length, reproducibility, and transparency.

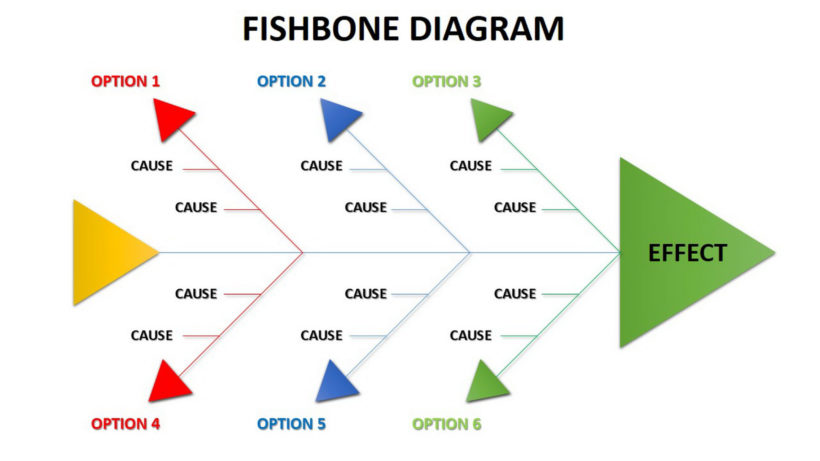

Nomic Embed is built through a multi-stage contrastive learning pipeline. It starts with training a BERT model with a context length of 2048 tokens, named nomic-bert-2048, with modifications inspired by MosaicBERT. The training involves:

- Rotary position embeddings,

- SwiGLU activations,

- DeepSpeed and FlashAttention,

- BF16 precision.
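
To make the first two items in the list concrete, here is a minimal NumPy sketch of rotary position embeddings and a SwiGLU feed-forward block. It is an illustration only, not the nomic-bert-2048 code: the function names, the `base=10000.0` frequency constant, the half-split pairing of dimensions, and the weight shapes are all assumptions chosen for the example.

```python
import numpy as np


def silu(x):
    """SiLU (swish) activation: x * sigmoid(x)."""
    return x / (1.0 + np.exp(-x))


def swiglu(x, w_gate, w_up, w_down):
    """SwiGLU feed-forward block: a SiLU-gated linear unit followed by a projection.

    Hypothetical shapes: x is (seq_len, d_model), w_gate and w_up are
    (d_model, d_hidden), and w_down is (d_hidden, d_model).
    """
    return (silu(x @ w_gate) * (x @ w_up)) @ w_down


def rotary(x, base=10000.0):
    """Apply rotary position embeddings to x of shape (seq_len, dim), dim even.

    Each pair of dimensions (i, i + dim/2) is rotated by an angle proportional
    to the token position, so dot products between rotated queries and keys
    depend only on the relative offset between positions.
    """
    seq_len, dim = x.shape
    half = dim // 2
    freqs = base ** (-np.arange(half) / half)           # one frequency per pair
    angles = np.arange(seq_len)[:, None] * freqs[None, :]
    cos, sin = np.cos(angles), np.sin(angles)
    x1, x2 = x[:, :half], x[:, half:]
    return np.concatenate([x1 * cos - x2 * sin, x1 * sin + x2 * cos], axis=1)
```

Because the rotation encodes position as an angle rather than as a learned table of fixed size, this style of position encoding is commonly associated with models that handle longer sequences.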

The model used an increased vocabulary size and a batch size of 4096. It is then contrastively trained on ~235M text pairs, using high-quality labeled datasets and hard-example mining. Nomic Embed outperforms existing models on benchmarks such as the Massive Text Embedding Benchmark (MTEB), the LoCo Benchmark, and the Jina Long Context Benchmark.
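
Contrastive training on text pairs can be sketched with an InfoNCE-style loss that uses in-batch negatives, a common objective for this kind of training. The article does not state the exact loss, so treat the snippet below as an assumption-laden illustration: the function name `info_nce_loss` and the `temperature=0.05` default are hypothetical, and the embeddings are plain NumPy arrays rather than model outputs.

```python
import numpy as np


def info_nce_loss(queries, documents, temperature=0.05):
    """InfoNCE loss with in-batch negatives.

    queries[i] and documents[i] form a positive pair; every other document
    in the batch serves as a negative for queries[i].
    """
    # L2-normalize so the dot product is cosine similarity.
    q = queries / np.linalg.norm(queries, axis=1, keepdims=True)
    d = documents / np.linalg.norm(documents, axis=1, keepdims=True)

    logits = (q @ d.T) / temperature                     # (batch, batch) similarities
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))

    # The correct document for query i sits on the diagonal.
    return float(-np.mean(np.diag(log_probs)))


# Toy usage: matching pairs should score a lower loss than mismatched pairs.
aligned = info_nce_loss(np.eye(4), np.eye(4))
mismatched = info_nce_loss(np.eye(4), np.roll(np.eye(4), 1, axis=0))
```

With in-batch negatives, a larger batch supplies more negatives per query, which is one reason large batch sizes are common in contrastive training. Hard-example mining goes further by adding negatives that look similar to the positive document.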

Nomic Embed not only surpasses closed-source models like OpenAI’s text-embedding-ada-002 but also outperforms other open-source models on various benchmarks. The emphasis on transparency and reproducibility, together with the release of model weights, training code, and curated data, demonstrates a commitment to openness in AI development. Nomic Embed’s performance on long-context tasks and the call for improved evaluation paradigms underscore its significance in advancing the field of text embeddings.

Pragati Jhunjhunwala is a consulting intern at MarktechPost. She is currently pursuing her B.Tech at the Indian Institute of Technology (IIT), Kharagpur. She is a tech enthusiast with a keen interest in software and data science applications. She is always reading about developments in different fields of AI and ML.
